
Frictionless Fake Account Detection: How to Get it Right

Uros Pavlovic

June 5, 2025

Fake account detection has evolved far beyond filtering spam usernames or blocking suspicious IPs. Today’s threats involve well-crafted synthetic identities, built to pass surface-level onboarding checks and behave like real users. These accounts are engineered, often using AI-driven automation, to bypass verification systems at scale and exploit digital platforms for financial gain.

Generative AI could enable fraud losses in the United States to reach $40 billion by 2027, up from $12.3 billion in 2023, according to the Deloitte Center for Financial Services.

It was also reported that the "top U.S. watchdog for consumer financial protection said Tuesday it would fine Ohio lender Fifth Third Bank (FITB.O) $20 million for allegedly opening fake customer accounts and forcing auto insurance on consumers who already had coverage, among other allegations" (Source: Reuters).

The accessibility of generative AI and low-cost automation tools compounds this growing wave of impersonation attacks. Fraudsters can now generate realistic contact details, mimic user behavior, and create synthetic identities that slip through traditional Know Your Customer (KYC) protocols. To stay ahead, organizations need detection mechanisms that analyze deeper signals silently and in real time.

What are synthetic identities, and why are they different?

Synthetic identities can also be described as ‘constructed’ identities. Fraudsters blend real and fake information to create accounts that look legitimate but belong to no actual person. These identities often use valid addresses, plausible names, and functioning contact details, like a recently registered email and a VoIP phone number, to bypass onboarding checks designed for stolen identity detection.

Unlike traditional fraud tactics that rely on compromised credentials, synthetic accounts are built gradually. A user profile might sit dormant for weeks, pass basic verification, and slowly build trust before being activated in a targeted attack. This makes them much harder to spot using surface-level validation or rigid KYC filters. The impact of synthetic and fake accounts spans multiple industries:

- Banking and fintech: Fraudsters use synthetic identities or stolen KYC data to open accounts for laundering money, running mule operations, or funneling proceeds from scams.

- Lending and BNPL (Buy Now, Pay Later): Fake users apply for small loans or BNPL installments with no intention to repay, causing direct financial loss and skewing credit risk metrics.

- E-commerce: Fake accounts exploit refund and return policies using virtual cards or manipulated shipping details. Others use stolen credentials to commit payment fraud with no traceable identity.

- Travel and hospitality: Bookings under fabricated names clog availability, especially when free cancellation is allowed. Meanwhile, malicious actors post fraudulent reviews to damage competitors or manipulate ratings.

- iGaming and betting platforms: Bonus abuse is rampant, with fake profiles repeatedly signing up to claim welcome credits or referral rewards, often using disposable phone numbers and synthetic emails.

- Online marketplaces and classifieds: Scammers use fake accounts to post fraudulent listings, coordinate scams, or defraud buyers and sellers.

Across each sector, fake account creation erodes trust, inflates operational costs, and introduces new regulatory risks, especially in regions tightening digital identity requirements. The most effective countermeasure is to start detection earlier: when contact data is first submitted, before the account has a chance to cause harm.

Key signals that help catch fake accounts before they go live

Sophisticated fake accounts are designed to pass surface-level checks, but they rarely stand up to deeper signal-based analysis. When phone numbers, emails, IP addresses, and domains are evaluated in context, subtle red flags emerge. Here’s how these signals help uncover inauthentic behavior before it enters your platform.

1. Phone Number Analysis

While a phone number may ring and appear valid, subtle attributes reveal risk:

- Disposable or temporary numbers linked to mass-issuance services are a major red flag.

- VoIP numbers can be legitimate but are often used by fraudsters to obscure geography and identity.

- Recently issued or frequently ported numbers have little trust history and are commonly used to cycle through fake registrations.

These attributes, when analyzed against a broader signal set, help flag synthetic patterns before interaction begins.
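
To make the phone checks concrete, here is a minimal Python sketch of a rule-based phone risk score. It assumes a phone-intelligence lookup has already returned a line type, an issuance date, and a port count; the `PhoneMetadata` fields, the weights, and the 30/90-day windows are hypothetical choices for illustration, not values from any particular provider.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class PhoneMetadata:
    """Hypothetical output of a phone-intelligence lookup."""
    line_type: str   # e.g. "mobile", "landline", "voip", "disposable"
    issued_on: date  # first-seen / activation date, if the provider exposes it
    port_count: int  # carrier ports observed since issuance


def phone_risk(meta: PhoneMetadata, today: date) -> tuple[int, list[str]]:
    """Return an illustrative 0-100 risk score plus the reasons behind it."""
    score, reasons = 0, []
    if meta.line_type == "disposable":
        score += 50
        reasons.append("disposable number")
    elif meta.line_type == "voip":
        # VoIP alone is not proof of fraud, so it carries a smaller weight.
        score += 20
        reasons.append("VoIP number")
    age_days = (today - meta.issued_on).days
    if age_days < 30:
        score += 25
        reasons.append(f"number issued {age_days} days ago")
    if meta.port_count > 0 and age_days < 90:
        score += 15
        reasons.append(f"ported {meta.port_count} time(s) since issuance")
    return min(score, 100), reasons
```

For a VoIP number issued two days earlier and ported once, the sketch returns a score of 60 with all three reasons attached. VoIP gets a lower weight than a disposable number because, as noted above, VoIP numbers can be legitimate.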

2. Email Analysis

Not all emails are equal, and trustworthiness can often be inferred from their metadata:

- Newly registered domains or uncommon domain extensions are a common trait of throwaway emails.

- No usage history (no prior signups, no digital footprint) raises questions about authenticity.

- Breach history involving the email can reveal if the account was created using compromised data.

Rather than blocking users outright, systems can assign dynamic risk scores to help decide when intervention is warranted.
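
The idea of scoring rather than blocking can be sketched in a few lines of Python. The example assumes upstream WHOIS/RDAP, digital-footprint, and breach lookups have already produced the `EmailMetadata` fields; the uncommon-TLD set, the weights, and the `allow`/`step_up`/`review` thresholds are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical "uncommon TLD" list for this sketch; a real list would be
# derived from your own sign-up and fraud data.
UNCOMMON_TLDS = {"xyz", "top", "click"}


@dataclass
class EmailMetadata:
    address: str
    domain_age_days: int   # from a WHOIS/RDAP lookup (assumed)
    footprint_hits: int    # prior sign-ups / online presence found (assumed)
    breach_count: int      # appearances in known breach datasets (assumed)


def email_risk(meta: EmailMetadata) -> tuple[int, list[str]]:
    """Return an illustrative 0-100 score and the reasons behind it."""
    score, reasons = 0, []
    domain = meta.address.rsplit("@", 1)[-1].lower()
    tld = domain.rsplit(".", 1)[-1]
    if meta.domain_age_days < 30:
        score += 30
        reasons.append(f"domain registered {meta.domain_age_days} days ago")
    if tld in UNCOMMON_TLDS:
        score += 10
        reasons.append(f"uncommon TLD .{tld}")
    if meta.footprint_hits == 0:
        score += 25
        reasons.append("no digital footprint")
    if meta.breach_count > 0:
        score += 15
        reasons.append(f"seen in {meta.breach_count} breach(es)")
    return min(score, 100), reasons


def decide(score: int) -> str:
    """Map a score to an action instead of a hard allow/block decision."""
    if score < 40:
        return "allow"      # pass silently
    if score < 70:
        return "step_up"    # e.g. an extra verification step
    return "review"         # route to a fraud analyst
```

With these illustrative weights, an address on a five-day-old `.xyz` domain with no footprint scores 65 and is routed to an extra verification step rather than rejected outright.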

3. Connection and IP data analysis

Network behavior tells a deeper story about a user’s intent and origin:

- Proxy, VPN, or Tor usage often masks a user's true location and device attributes.

- IPs belonging to hosting providers or cloud infrastructure hint at bot-driven or automated account creation.

- Geolocation mismatches between the IP and declared user location may expose synthetic patterns or unauthorized access attempts.

Connection-based signals are particularly useful because they are hard to fake consistently across sessions.
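
The connection checks above map naturally onto Python's standard `ipaddress` module. In this sketch the proxy and hosting ranges are placeholders taken from the RFC 5737 documentation networks, and the IP's country is assumed to come from a GeoIP lookup performed elsewhere; a real deployment would load these from a reputation feed.

```python
import ipaddress

# Placeholder reputation data. These are RFC 5737 documentation ranges, not
# real proxy or hosting networks; production data would come from a feed.
PROXY_RANGES = [ipaddress.ip_network("203.0.113.0/24")]
HOSTING_RANGES = [ipaddress.ip_network("198.51.100.0/24")]


def ip_signals(ip: str, ip_country: str, declared_country: str) -> list[str]:
    """Return connection-level red flags for one sign-up request.

    ip_country is assumed to come from a GeoIP lookup done elsewhere.
    """
    addr = ipaddress.ip_address(ip)
    flags = []
    # Membership tests return False (not an error) for IPv6 vs IPv4 networks.
    if any(addr in net for net in PROXY_RANGES):
        flags.append("proxy/VPN/Tor range")
    if any(addr in net for net in HOSTING_RANGES):
        flags.append("hosting/cloud IP")
    if ip_country.upper() != declared_country.upper():
        flags.append(f"geo mismatch: IP in {ip_country}, declared {declared_country}")
    return flags
```

Because each check returns a named flag, the results can feed into a broader risk score instead of acting as a hard block on every VPN user.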

4. Domain Intelligence (for business accounts)

Some fake accounts operate under the guise of a business, but weak or mismatched domain signals often give them away:

- The domain may exist but lack basic infrastructure like MX records, SSL certificates, or a credible website.

- No online presence or suspicious WHOIS metadata can flag attempts to spoof real businesses.

- If the domain links to unrelated entities or overlaps with blacklisted actors, deeper scrutiny is warranted.

Domain signals offer an additional verification layer for Know Your Business (KYB) and similar use cases, especially when dealing with vendors, merchants, or marketplaces.
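
A rough sketch of the domain checks follows. It deliberately performs no network calls: the `DomainFacts` fields are assumed to be populated by separate DNS (MX), TLS, WHOIS/RDAP, and web lookups, and `BLOCKLIST` is a hypothetical stand-in for whatever blocklisted-actor data a team maintains. Treating hidden WHOIS as a flag only for young domains is an assumption, since many legitimate businesses use WHOIS privacy.

```python
from dataclasses import dataclass, field


@dataclass
class DomainFacts:
    """Results of upstream DNS, TLS, WHOIS/RDAP and web lookups (assumed)."""
    domain: str
    has_mx: bool
    has_valid_tls: bool
    has_website: bool
    whois_age_days: int
    whois_privacy: bool
    linked_entities: set[str] = field(default_factory=set)


# Hypothetical blocklist of known bad actors, for illustration only.
BLOCKLIST = {"acme-fraud-ring"}


def domain_flags(facts: DomainFacts) -> list[str]:
    """Return human-readable red flags for a business domain."""
    flags = []
    checks = (("MX records", facts.has_mx),
              ("TLS certificate", facts.has_valid_tls),
              ("website", facts.has_website))
    missing = [name for name, present in checks if not present]
    if missing:
        flags.append("missing " + ", ".join(missing))
    # Assumption: hidden WHOIS only counts against young domains.
    if facts.whois_age_days < 90 and facts.whois_privacy:
        flags.append("young domain with hidden WHOIS")
    overlap = facts.linked_entities & BLOCKLIST
    if overlap:
        flags.append("linked to blocklisted actor(s): " + ", ".join(sorted(overlap)))
    return flags
```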

5. Combined signal evaluation

The real power comes when signals are interpreted holistically. A phone number might seem fine, and an email might be deliverable, but viewed together, they might reveal coordinated behavior:

- Same phone-email pattern used across dozens of unrelated signups.

- Mismatched country origins between email registration and phone carrier.

- Shared IP fingerprints across accounts with otherwise “clean” contact info.

This layered evaluation builds a trust profile based on behavior and context, not just data accuracy, which is crucial for detecting fraud that would otherwise pass traditional KYC.
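
Cross-account correlation is where a single sign-up stops being evaluated in isolation. The Python sketch below groups sign-ups by a hypothetical phone-email pattern key (phone prefix, email local part with digits removed, email domain) and by IP, then flags clusters at or above a size threshold, along with phone/email country mismatches. The key definition, the `min_cluster` default, and the country fields are assumptions for illustration.

```python
import re
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Signup:
    account_id: str
    phone: str          # E.164 format, e.g. "+393331200001"
    email: str
    ip: str
    phone_country: str  # carrier country from a phone lookup (assumed)
    email_country: str  # country associated with the email registration (assumed)


def pattern_key(s: Signup) -> tuple[str, str, str]:
    """Hypothetical 'pattern': phone prefix + digit-stripped local part + domain.

    Templated addresses such as anna.rossi1@ and anna.rossi2@ collapse to one key.
    """
    local, _, domain = s.email.lower().partition("@")
    return (s.phone[:8], re.sub(r"\d+", "", local), domain)


def cross_account_flags(signups: list[Signup], min_cluster: int = 3) -> dict[str, list[str]]:
    """Return reasons per account ID; accounts with no flags are omitted."""
    by_pattern = defaultdict(list)
    by_ip = defaultdict(list)
    for s in signups:
        by_pattern[pattern_key(s)].append(s.account_id)
        by_ip[s.ip].append(s.account_id)

    flags = defaultdict(list)
    for ids in by_pattern.values():
        if len(ids) >= min_cluster:
            for account in ids:
                flags[account].append(f"phone-email pattern shared by {len(ids)} accounts")
    for ids in by_ip.values():
        if len(ids) >= min_cluster:
            for account in ids:
                flags[account].append(f"IP shared by {len(ids)} accounts")
    for s in signups:
        if s.phone_country != s.email_country:
            flags[s.account_id].append("phone/email country mismatch")
    return dict(flags)
```

Shared IPs alone can be innocent (offices, carrier-grade NAT), which is why the sketch attaches each flag as a reason instead of rejecting the accounts outright.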

How Trustfull flags fake accounts via cross-referenced signals

Most fake accounts aren’t built to be obvious. A disposable phone number can still receive calls. A freshly minted email address can still deliver messages. What makes them suspicious is rarely a single trait — it’s how these traits connect.

Imagine a new user signing up on a lending platform with the following details:

- A phone number that rings and returns a valid carrier.

- An email address that passes deliverability checks.

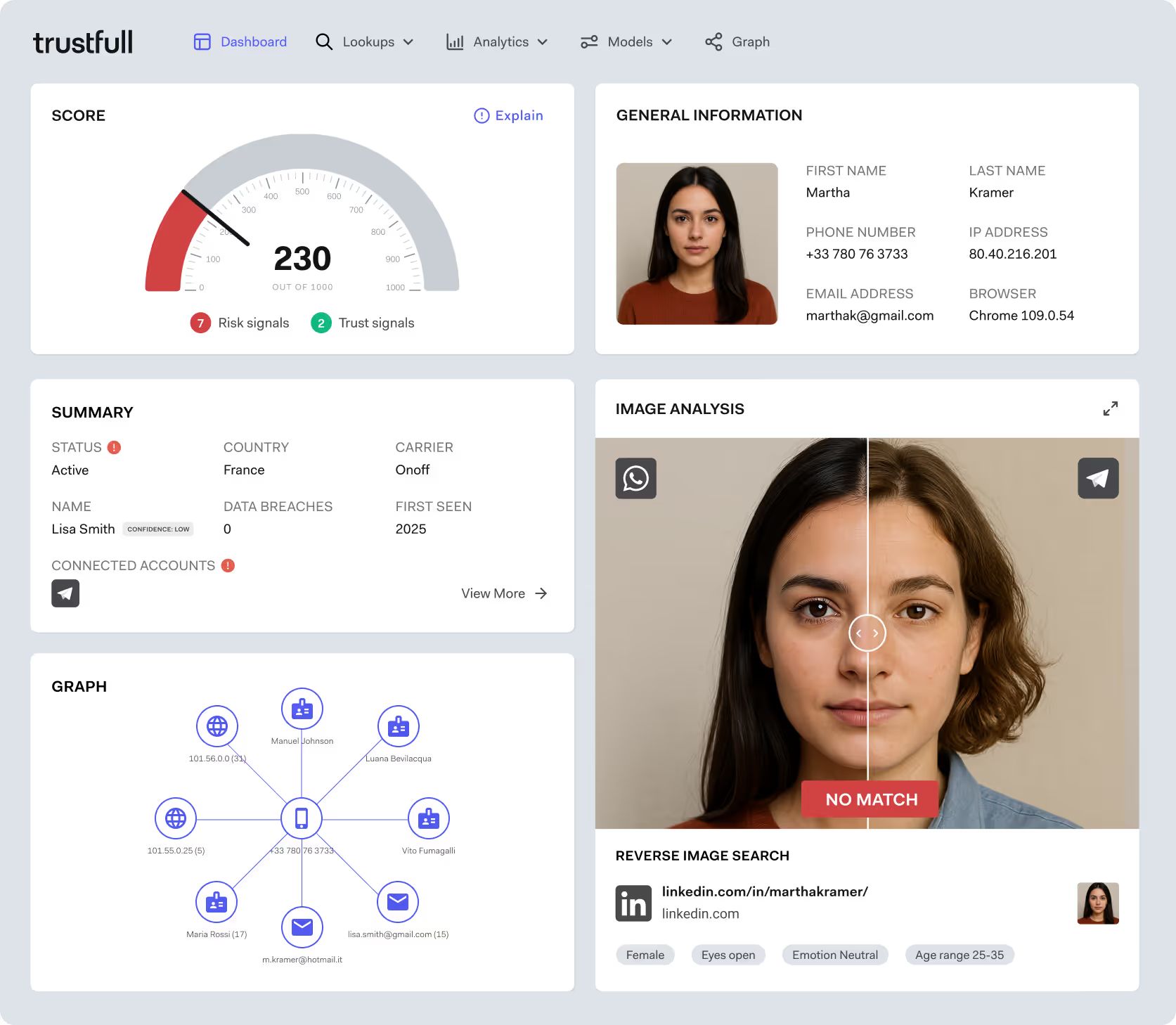

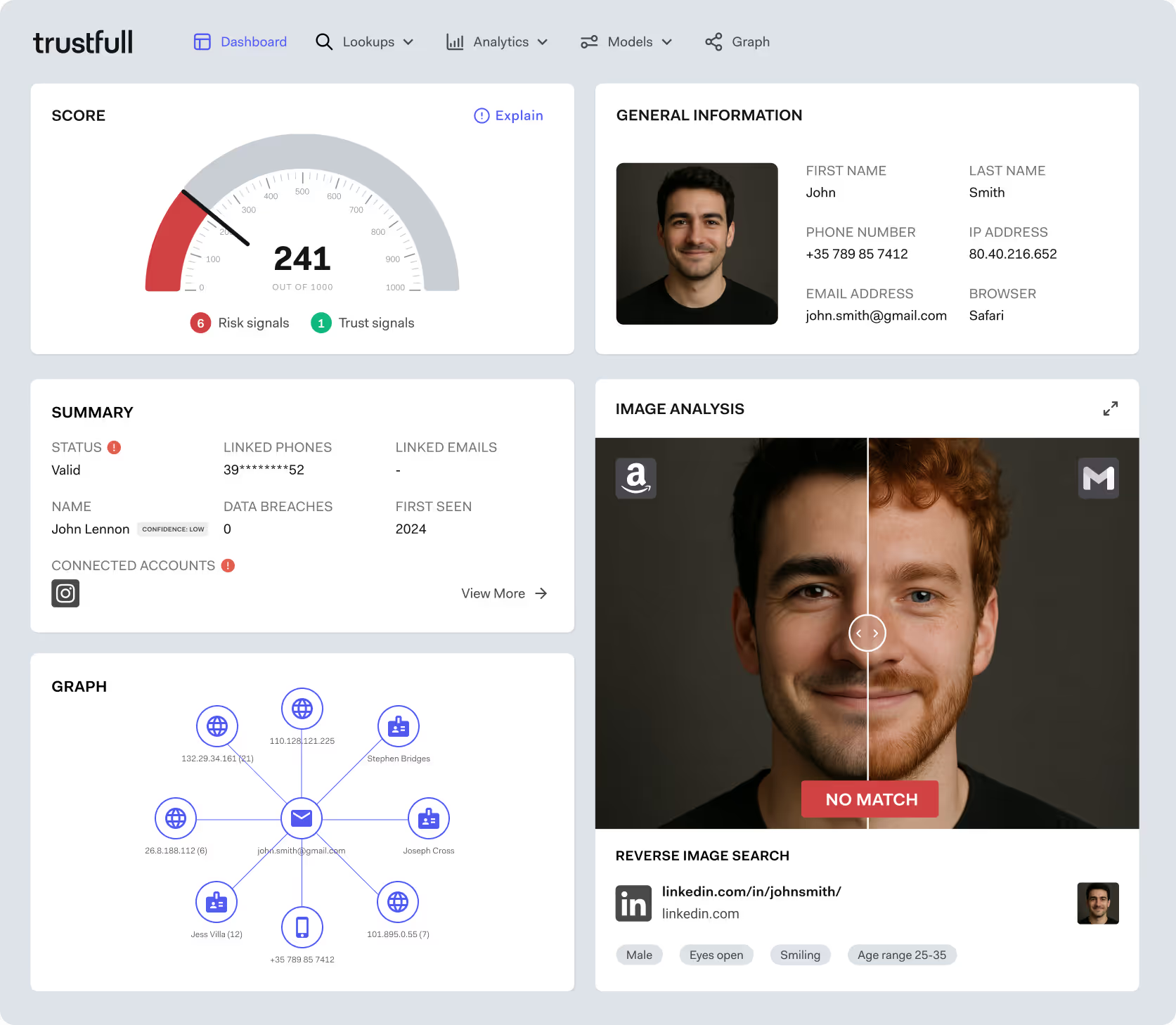

At first glance, nothing seems off. But inside the Trustfull dashboard, a different picture unfolds.

Phone metadata shows the number was issued just two days ago and has already been ported once — both signs of burner behavior.

Email analysis reveals a newly registered domain with no social presence or historical usage.

Connection data flags the signup as coming from a known proxy range associated with prior fraudulent attempts.

None of these signals alone would trigger alarm bells. Together, though, they produce a high-risk composite score, visible in real time through a clean, explainable interface. Trustfull highlights the reasoning behind each flag, allowing fraud teams to act fast or route the user through additional checks if needed.

This cross-referencing doesn’t rely on intrusive data collection or manual reviews. It works silently in the background, during signup, without adding any extra steps or friction for legitimate users. In a world where fake accounts can bypass surface-level validation, contextual correlation of digital signals is the fastest, most accurate way to spot risk before it becomes a problem.
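
One common way to turn several individually weak signals into a single composite score is a noisy-OR combination, where risk = 1 - prod(1 - w_i) over the observed signals. The sketch below applies it to the signals from the scenario above. It is a generic illustration of the idea, not Trustfull's scoring model, and the weights and 0.7 threshold are invented for the example.

```python
import math

# Hypothetical, uncalibrated per-signal weights in [0, 1].
SIGNAL_WEIGHTS = {
    "phone_issued_recently": 0.35,
    "phone_ported": 0.20,
    "email_domain_new": 0.30,
    "email_no_footprint": 0.25,
    "ip_known_proxy_range": 0.40,
}
HIGH_RISK = 0.7  # illustrative threshold


def composite_risk(observed: list[str]) -> tuple[float, list[tuple[str, float]]]:
    """Noisy-OR combination: risk = 1 - prod(1 - w) over observed signals.

    Also returns each contributing signal, highest weight first, so a
    reviewer can see which signals drove the score.
    """
    contributions = sorted(((name, SIGNAL_WEIGHTS[name]) for name in observed),
                           key=lambda item: item[1], reverse=True)
    risk = 1 - math.prod(1 - weight for _, weight in contributions)
    return risk, contributions
```

With all five scenario signals observed, the score comes out at roughly 0.84, above the threshold, while no single signal on its own exceeds 0.4. The sorted contribution list is a simple stand-in for the kind of per-flag explanation a review interface would display.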

What AI agents mean for detection

The landscape of fake accounts is evolving fast. What began with obvious spam and copy-paste fraud has morphed into something more elusive: automated identity generation and AI-powered sign-up flows. In this next phase, the threats aren’t just coming from humans pretending to be someone else. They’re coming from machines pretending to be people.

Legitimate businesses are already experimenting with AI agents that create accounts, book services, and interact with digital platforms on behalf of real users. These agents aren’t malicious; they’re designed to simplify experiences, especially in sectors like travel, e-commerce, and online banking.

But here’s the challenge: not all AI agents are benign.

Some are built by trusted developers with well-defined use cases; others are disguised automation scripts that scrape platforms, test attack surfaces, or mass-create accounts with stolen or synthetic data. This convergence of fake accounts and AI automation makes detection harder than ever.

That’s why platforms need to move beyond a binary view of traffic: not all automation is bad, and not all human-like behavior is good.

To future-proof account creation, businesses will need to distinguish between:

- Malicious bots creating fake accounts at scale

- Legitimate AI agents acting on behalf of real users

- Real users using automation to enhance speed or anonymity

This is where frameworks like KYA (Know Your Agent) come in: they are built to assess whether a non-human visitor is a trusted actor or a threat (Related: Know Your Agent: How to Verify AI Agents at Scale).

Trustfull is already bridging this gap. Our platform analyzes digital signals not just for their surface value, but for the patterns they form over time, patterns that distinguish legitimate agents and real users from coordinated fraud attempts.

As AI agents become more embedded in how users interact online, the next generation of fake account detection will rely on real-time signal analysis, behavior profiling, and a new approach to identity: one that cares less about who you claim to be than about what your data says you are.

FAQs

1. Can fake accounts be created entirely without using stolen personal data?

Yes. Sophisticated fraudsters now generate synthetic identities using fabricated data that mimics real user behavior, making them harder to detect with traditional checks.

2. How do fake accounts evolve after creation?

They often remain dormant for a period while gradually building activity histories, such as email usage or small purchases, to appear legitimate before executing fraud.

3. What signals are most effective in flagging fake accounts during onboarding?

A combination of phone number behavior, email history, and IP reputation gives early, silent insight into potential fraud without user friction.

4. Why are traditional KYC checks not enough to catch fake profiles?

Because traditional KYC checks validate inputs at face value, fake accounts can pass as long as their details look plausible. What’s missing is layered context from digital signals that reveal anomalies.