
How to Use Image Analysis to Strengthen Fraud Prevention

Uros Pavlovic

May 15, 2025

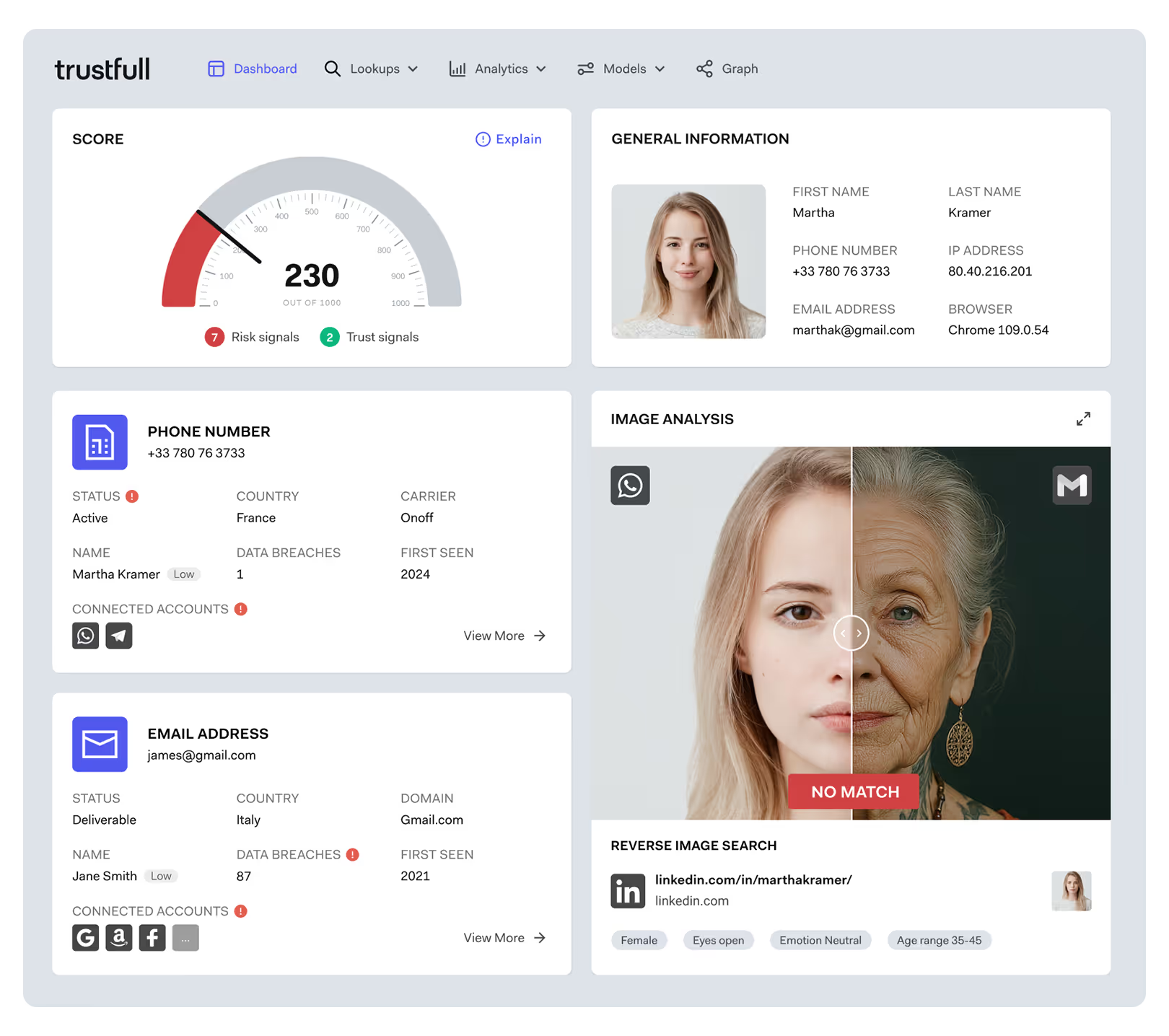

Financial fraud is getting harder to tackle. A wide range of evolving tactics continually threatens businesses of every type, and image analysis is emerging as a critical tool for detecting sophisticated scams. Image analysis evaluates visual elements, such as profile pictures and avatars, to identify inconsistencies and potential fraudulent activity. This approach is particularly effective during customer onboarding and when users update their account information, such as their contact details. For instance, if a user updates their phone number, image analysis can retrieve the profile photos associated with the new number and check whether they are consistent with the existing account information, potentially uncovering cases where legitimate accounts are repurposed for fraudulent activities like money muling.

The urgency of adopting advanced fraud detection methods is underscored by recent findings. A report by Resemble AI revealed that in the first quarter of 2025 alone, deepfake-driven fraud caused over $200 million in financial losses. Fraudsters also reportedly used an AI deepfake to steal $25 million from UK engineering firm Arup.

What is image analysis in fraud prevention?

Image analysis in fraud prevention refers to the examination of visual elements associated with user accounts (such as profile photos and avatars) to detect signs of fraudulent activity. This process involves assessing the originality of images, identifying inconsistencies, and cross-referencing visual data with other digital signals to evaluate the legitimacy of an account.

Unlike biometric security measures that rely on government-issued IDs or facial recognition systems, image analysis focuses on publicly available images to identify anomalies. For example, the use of stock photos, celebrity images, or AI-generated faces in user profiles can be indicative of synthetic identities or fraudulent intent.

The significance of image analysis is amplified by the rapid increase in deepfake-related fraud. According to Signicat, fraud attempts involving deepfakes have surged by 2,137% over the past three years, with financial institutions being primary targets. These sophisticated forgeries often evade traditional detection methods, making image analysis an essential component of a comprehensive fraud prevention strategy.

Face match and consistency checks

In fraud prevention, face matching involves evaluating the consistency between a user's profile image and other digital signals, such as email, phone number, and device metadata, to detect potential anomalies. This process doesn't rely on biometric identification but rather on assessing whether the visual elements align with the associated account information.

For instance, if a profile picture is a stock image or an AI-generated face, and it's linked to an email address with a recent creation date or a phone number with limited usage history, these discrepancies can raise red flags. Additionally, the reuse of the same image across multiple accounts or the use of celebrity photos can indicate fraudulent activity.
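The cross-checks above can be expressed as a few simple rules. The following Python sketch is only an illustration under stated assumptions: the `AccountSignals` fields, the 30-day thresholds, and the flag wording are hypothetical, and it assumes upstream checks have already labeled the image (for example as stock, AI-generated, or a celebrity photo) and counted how many accounts share it.

```python
from dataclasses import dataclass

# Hypothetical thresholds; real values would be tuned on labeled data.
NEW_EMAIL_DAYS = 30
SHORT_PHONE_HISTORY_DAYS = 30
REUSE_LIMIT = 1  # an image seen on more accounts than this is suspicious

# Image labels assumed to come from an upstream classifier (not shown).
SUSPICIOUS_LABELS = {"stock", "ai_generated", "celebrity"}


@dataclass
class AccountSignals:
    # All fields are illustrative assumptions, filled in by upstream checks.
    image_label: str          # e.g. "original", "stock", "ai_generated", "celebrity"
    image_account_count: int  # number of accounts sharing this profile image
    email_age_days: int       # how long ago the email address was created
    phone_history_days: int   # how long the phone number has a usage history


def consistency_flags(s: AccountSignals) -> list[str]:
    """Return readable flags for mismatches between the image and contact data."""
    flags: list[str] = []
    suspicious_image = s.image_label in SUSPICIOUS_LABELS
    if suspicious_image and s.email_age_days < NEW_EMAIL_DAYS:
        flags.append("suspicious image on recently created email")
    if suspicious_image and s.phone_history_days < SHORT_PHONE_HISTORY_DAYS:
        flags.append("suspicious image on phone with little usage history")
    if s.image_account_count > REUSE_LIMIT:
        flags.append("image reused across multiple accounts")
    if s.image_label == "celebrity":
        flags.append("celebrity photo used as profile image")
    return flags


if __name__ == "__main__":
    example = AccountSignals("ai_generated", 1, email_age_days=3, phone_history_days=400)
    print(consistency_flags(example))  # ['suspicious image on recently created email']
```

None of these flags proves fraud on its own; each simply marks a discrepancy worth weighing alongside other signals.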

The increasing sophistication of AI-generated images, or deepfakes, has made it more challenging to detect such inconsistencies. However, when visual data is cross-referenced with metadata and other digital footprints, organizations can identify and mitigate fraudulent activities more effectively.

Detecting synthetic identities through image patterns

Synthetic identities are fabricated personas created by combining real and fake information, often used to commit fraud. One common tactic involves using manipulated or AI-generated images as profile pictures to lend credibility to these fictitious identities.

These images may exhibit subtle inconsistencies, such as unnatural facial features or mismatched lighting, which can be detected through careful analysis. Moreover, when these images are cross-referenced with other digital signals, like IP addresses, email domains, or phone number histories, additional discrepancies may emerge. For example, an account using a high-quality profile picture but linked to an email domain known for disposable addresses, or a phone number with no prior usage history, could be indicative of a synthetic identity.

What makes image-based signals unique among digital trust signals?

Most digital signals used in fraud detection, such as device ID, IP reputation, or phone age, are largely technical. Image-based signals, by contrast, engage a visual and contextual layer of identity, offering a different vantage point for assessing authenticity. Instead of detecting patterns in metadata alone, image analysis captures the intentionality behind presentation. It answers: “How is this user choosing to appear?” and “Does that visual identity align with their digital behavior?”

For example, a freshly created account featuring a high-resolution professional headshot may seem polished, but when paired with an email linked to known spam activity or a phone number with no prior footprint, the visual presentation becomes a signal of suspicion rather than credibility. Tools that examine image originality, detect repeated use across unrelated accounts, and compare face attributes to the account’s declared region or behavioral traits can add layers of nuance that other signal types can’t provide.

This makes image data particularly helpful in identifying fraud attempts that are designed to pass basic technical checks but fail at the level of identity coherence.

Common fraud tactics involving image manipulation

Fraudsters continuously adapt, and image-based deception has become more nuanced than simple stock photo use. Today, image manipulation tactics include:

- Recycled avatars: identical profile pictures appearing across dozens or hundreds of unrelated accounts, often linked to affiliate abuse or spam networks.

- Morphed or blurred photos: slight alterations made to bypass duplicate image detection while retaining the same fabricated persona.

- Celebrity impersonation: use of public figures’ images in low-risk environments where the fraudster assumes the victim won't notice or care.

- GAN-generated faces: faces produced by generative adversarial networks (GANs), which mimic realistic human portraits but often contain telltale artifacts, such as mismatched earrings or warped backgrounds.

- Synthetic diversity: fraud rings may intentionally cycle between different gender or ethnic visual cues across fake accounts to avoid detection by static pattern-matching tools.

These tactics all serve the same purpose: to create plausible-looking accounts that blend into a platform’s ecosystem. But with targeted image analysis, such manipulations can be flagged not by appearance alone but also by frequency, context, and alignment with the user’s other digital attributes.
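Exact-match duplicate detection is easy to evade: changing a single pixel produces a completely different cryptographic hash. Perceptual hashing is one widely used way to catch recycled and lightly morphed avatars. The sketch below implements average hashing (aHash), chosen here for brevity rather than as the method any particular vendor uses. It assumes the image has already been decoded, converted to grayscale, and resized to an 8x8 grid (that preprocessing step is not shown), and the 5-bit match threshold is an illustrative assumption.

```python
def average_hash(pixels: list[list[int]]) -> int:
    """Return a 64-bit hash: each bit is 1 if that pixel is brighter than the mean.

    Assumes `pixels` is an 8x8 grid of grayscale values in the range 0-255.
    """
    flat = [p for row in pixels for p in row]
    if len(flat) != 64:
        raise ValueError("expected an 8x8 grayscale grid")
    mean = sum(flat) / len(flat)
    h = 0
    for p in flat:
        h = (h << 1) | (1 if p > mean else 0)  # first pixel ends up as the top bit
    return h


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def is_near_duplicate(a: int, b: int, max_distance: int = 5) -> bool:
    # The 5-bit threshold is an illustrative assumption, not a standard value.
    return hamming(a, b) <= max_distance


if __name__ == "__main__":
    # Synthetic 8x8 gradient standing in for a downscaled avatar.
    original = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
    brighter = [[p + 3 for p in row] for row in original]    # slight edit
    inverted = [[255 - p for p in row] for row in original]  # heavy edit
    print(hamming(average_hash(original), average_hash(brighter)))  # 0
    print(hamming(average_hash(original), average_hash(inverted)))  # 64
```

A uniformly brightened copy produces the same hash, while an inverted copy differs in all 64 bits. Average hashing is deliberately simple and can be fooled by crops, rotations, or heavier edits, so more robust variants (such as DCT-based pHash) are typically preferred in practice.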

Face matching vs. face recognition: what’s the difference?

The terms "face matching" and "face recognition" are often used interchangeably, but in fraud prevention, their functions are distinct, and understanding the difference is essential for both compliance and implementation.

Face recognition typically refers to the process of identifying or verifying a person’s identity by comparing their face against a database of known identities. It’s commonly used in border control, law enforcement, or secure biometric authentication. This process raises significant privacy concerns, attracts regulatory scrutiny, and often involves government-issued IDs.

Face matching, on the other hand, is used to assess the internal consistency of a user’s visual identity. It doesn’t aim to find a match in an external system; instead, it evaluates whether a profile picture or uploaded image aligns with the behavioral and digital traits already associated with the user, such as whether the same image appears across multiple unrelated accounts, or whether the visual presentation suddenly changes after a contact detail update.
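Checking whether the same image appears across unrelated accounts boils down to a similarity lookup. Here is a minimal sketch, assuming each profile image has already been reduced to a 64-bit perceptual hash, and using a hypothetical `owner_key` (for instance, a customer ID that legitimately links several accounts to one person) to separate related accounts from unrelated ones. The class name, threshold, and in-memory list are all illustrative.

```python
def hamming(a: int, b: int) -> int:
    """Number of differing bits between two 64-bit image hashes."""
    return bin(a ^ b).count("1")


class ImageReuseIndex:
    """Hypothetical in-memory index of profile-image hashes per account."""

    def __init__(self, max_distance: int = 5):
        self.max_distance = max_distance  # illustrative near-duplicate threshold
        self.entries: list[tuple[int, str, str]] = []  # (hash, account_id, owner_key)

    def add(self, image_hash: int, account_id: str, owner_key: str) -> None:
        self.entries.append((image_hash, account_id, owner_key))

    def unrelated_matches(self, image_hash: int, owner_key: str) -> set[str]:
        """Accounts with a near-identical image that belong to a different owner."""
        return {
            account_id
            for h, account_id, owner in self.entries
            if owner != owner_key and hamming(h, image_hash) <= self.max_distance
        }


if __name__ == "__main__":
    index = ImageReuseIndex()
    index.add(0b1010, "acc-1", "owner-A")
    index.add(0b1011, "acc-2", "owner-B")  # near-identical image, different owner
    index.add(0b1010, "acc-3", "owner-A")  # same owner, so not counted as reuse
    print(index.unrelated_matches(0b1010, "owner-A"))  # {'acc-2'}
```

The linear scan keeps the example short; at platform scale, a nearest-neighbor structure for Hamming space (such as a BK-tree) would replace it.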

For platforms concerned with synthetic identity fraud or account repurposing, face matching provides a low-friction, privacy-compliant way to evaluate trust without performing invasive biometric analysis.

When does image analysis add real value in digital onboarding?

Not every user journey calls for intensive image scrutiny. But in environments where digital trust needs to be established quickly, like fintech apps, crypto exchanges, or online lenders, image analysis becomes a signal that can add strategic depth to onboarding decisions.

Initial sign-up is one obvious touchpoint, especially in cases where users upload a profile picture or avatar. However, one of the more overlooked yet high-risk stages is profile updates. When users change their email address or phone number, the system may still consider the account "verified" based on previous behavior. But if image analysis tied to the newly provided contact information reveals a radically different profile image, or one reused in a known fraudulent account, it can be an early indicator of account takeover or a legitimate profile being sold on the black market.
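The profile-update check described above can be sketched as a comparison between the image already on file and the image associated with the new contact detail. This is a hypothetical illustration: it assumes both images have been reduced to 64-bit perceptual hashes, that a set of hashes from confirmed fraudulent accounts is available, and that a 10-bit distance threshold is meaningful, all of which are illustrative assumptions.

```python
from typing import Optional


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two 64-bit image hashes."""
    return bin(a ^ b).count("1")


def check_contact_update(
    stored_image_hash: Optional[int],
    new_contact_image_hash: Optional[int],
    known_fraud_hashes: set[int],
    max_distance: int = 10,  # illustrative threshold
) -> list[str]:
    """Flag a contact-detail change whose associated image looks inconsistent."""
    flags: list[str] = []
    if new_contact_image_hash is None:
        # No public image found for the new email or phone: nothing to compare.
        return flags
    if any(hamming(new_contact_image_hash, h) <= max_distance for h in known_fraud_hashes):
        flags.append("new contact's image matches a known fraudulent account")
    if stored_image_hash is not None and hamming(stored_image_hash, new_contact_image_hash) > max_distance:
        flags.append("new contact's image differs from the account's existing image")
    return flags


if __name__ == "__main__":
    # Stored avatar hash 0, new contact's avatar shares no bits with it.
    print(check_contact_update(0, (1 << 64) - 1, known_fraud_hashes=set()))
```

A differing image is not proof of takeover (people do change photos), which is why the function returns flags to feed into a wider risk score rather than a block decision.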

This approach doesn’t need to rely on facial comparison alone. Metadata, image type, and origin patterns can all be evaluated in the background without disrupting the user experience. When synchronized with email and phone intelligence, image signals can help detect inconsistencies that might otherwise go unnoticed until after fraud has occurred.

Image signals as part of digital footprint analysis

Image analysis gains its full utility when it functions not as an isolated signal, but as part of a wider assessment of a user’s digital footprint. Put simply, deception is exposed by data points that fall out of sync with one another. A platform evaluating a new sign-up or an account update can cross-reference a profile image with the user’s digital context:

- Is the phone number recently activated or previously tied to suspicious behavior?

- Does the email domain suggest disposability or bulk account creation?

- Is the browser environment aligned with the declared region or device characteristics?

Now add the image signal: if the profile picture is linked to multiple other accounts with divergent data or appears on accounts with expired or mismatched credentials, the probability of fraud increases significantly. Platforms like Trustfull coordinate these checks through real-time orchestration. Rather than being treated as a static verification step, image input becomes part of a continuously evaluated identity signal stack: scored, weighted, and assessed alongside phone, email, IP, and device data.
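One common way to turn this kind of orchestration into a decision is a weighted score. The sketch below combines boolean signals with a logistic function; the signal names, weights, bias, and approve/flag/reject cut-offs are hypothetical and are not Trustfull's scoring model.

```python
import math

# Hypothetical weights; in practice they would be learned from labeled
# fraud outcomes (e.g. with logistic regression) rather than set by hand.
WEIGHTS = {
    "phone_recent_or_flagged": 1.2,
    "email_disposable": 1.5,
    "browser_region_mismatch": 0.8,
    "image_shared_with_divergent_accounts": 2.0,
}
BIAS = -3.0  # keeps the baseline probability low when no signal fires


def fraud_probability(signals: dict[str, bool]) -> float:
    """Sum the weights of the signals that fired and squash with a logistic curve."""
    z = BIAS + sum(w for name, w in WEIGHTS.items() if signals.get(name, False))
    return 1.0 / (1.0 + math.exp(-z))


def decision(p: float, review_at: float = 0.5, reject_at: float = 0.85) -> str:
    """Map a probability to an action using illustrative cut-offs."""
    if p >= reject_at:
        return "reject"
    if p >= review_at:
        return "flag"
    return "approve"


if __name__ == "__main__":
    image_only = {"image_shared_with_divergent_accounts": True}
    image_and_email = {**image_only, "email_disposable": True}
    print(decision(fraud_probability(image_only)))       # approve
    print(decision(fraud_probability(image_and_email)))  # flag
```

Note how the image signal alone leaves the applicant approved, while the same image signal combined with a disposable email pushes the account into review. This is the compositional effect described above, and it is also why visual cues alone make a poor basis for rejection.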

This compositional view of identity is what allows fraud teams to respond intelligently to edge cases. The key is identifying patterns of inconsistency that are easy to overlook when each element is analyzed in isolation.

The growing importance of image intelligence in fraud detection

Visual identity signals were once considered secondary in fraud prevention. Today, they’re gaining ground as frontline indicators, especially in digital environments where users aren’t physically present and accounts can be generated at scale. As fraudsters adopt more advanced tactics, from AI-generated personas to profile-stuffing schemes, organizations need tools that can pick up on deception beyond surface-level data.

Image intelligence is particularly effective against polished synthetic accounts that pass traditional verification checks. A fake user might clear email and phone checks, yet still raise suspicion when their avatar appears across dozens of unrelated sign-ups or carries subtle digital fingerprints consistent with deepfake generation.

Platforms such as Trustfull have adopted this approach by offering face match and image signal capabilities. These features are designed to integrate with other digital signals, not as biometric tools, but as intelligence inputs that add context, continuity, and scrutiny to identity evaluations.

Learn how you can expose mismatches, highlight unusual behaviors, and enrich scoring systems that decide whether to approve, flag, or reject a user.

FAQs

Is image analysis for fraud prevention compliant with data privacy regulations?

Yes, image analysis used in fraud detection examines publicly available profile images, such as those associated with open email accounts. It does not access or process private media from encrypted messaging apps or locked social platforms.

Can platforms detect whether an uploaded image is AI-generated?

Many systems can identify AI-generated content by analyzing anomalies in pixel structure, metadata inconsistencies, or known GAN (Generative Adversarial Network) signatures. These clues often appear when a user attempts to pass off a synthetic face as genuine during account creation or modification.

Does image analysis slow down the onboarding process?

When implemented correctly, image signals are evaluated passively and in real time, without requiring user input or additional steps. The process is designed to enhance fraud checks without introducing friction into the user experience.

Are there risks of false positives in image-based fraud detection?

Yes, particularly if platforms rely solely on visual cues without correlating them with other digital signals. That’s why image analysis is most effective when interpreted as part of a broader risk scoring system.

Is image analysis only useful at sign-up?

No. It's especially valuable during account changes, such as when users update contact details or show signs of takeover. These events often go unchecked by traditional systems but can be critical windows for detecting fraud.